

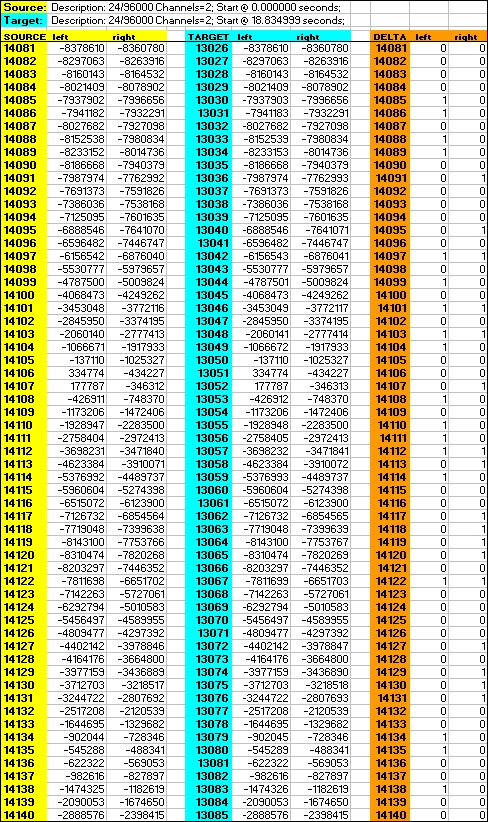

Chapter 4 - Is Windows XP audio ‘Bit Perfect’?

A music track played on one computer was output via S/PDIF to a second computer, which recorded the incoming data. The two ‘tracks’ were saved in wav format, time-aligned, converted to numeric values and compared in a spreadsheet. This snapshot, taken from 64,000 consecutive samples, shows the copy to be ‘bit perfect’ except for the LSB of about half the samples. These errors are almost certainly caused by rounding in the recording process, not by the copying. It is sometimes claimed that the operating system corrupts music data; the result reported here suggests otherwise.

The test procedure

The test design linked two computers, with the second one emulating a DAC. The ‘source’ was a Computer Transport configured as described herein and fitted with an RME HDSP 9652 soundcard set up to provide the master clock. Music data were passed via S/PDIF to the ‘target’ computer, which was fitted with an ESI Juli@ soundcard whose clock was set to external. ASIO 2 drivers were used in both machines. A two-metre cable with poor-quality connectors and no shielding was deliberately chosen. The incoming data were recorded on the second machine and the two datasets compared.
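The time-alignment step can be sketched as a search for the sample offset at which the recorded track best matches the source. This is a minimal sketch, not the method actually used in this test: it assumes both tracks are already decoded to lists of signed integer samples, and the function names `best_offset` and `align`, the search window and the one-LSB tolerance are illustrative choices.

```python
def best_offset(source, copy, max_shift, tol=1):
    """Return the shift of `copy` relative to `source` (in samples) that
    maximises the number of samples agreeing to within `tol` LSB.

    A positive shift means copy[i + shift] lines up with source[i]."""
    best_shift, best_score = 0, -1
    for shift in range(-max_shift, max_shift + 1):
        score = sum(
            1
            for i, s in enumerate(source)
            if 0 <= i + shift < len(copy) and abs(copy[i + shift] - s) <= tol
        )
        if score > best_score:  # strict comparison: the first best shift wins ties
            best_shift, best_score = shift, score
    return best_shift


def align(source, copy, max_shift=1000, tol=1):
    """Trim both tracks to the region where they overlap at the best shift."""
    shift = best_offset(source, copy, max_shift, tol)
    if shift >= 0:
        copy = copy[shift:]  # drop leading samples of the recording
    else:
        source = source[-shift:]  # drop leading samples of the source
    n = min(len(source), len(copy))
    return source[:n], copy[:n]


if __name__ == "__main__":
    source = [0, 5, -3, 7, 2, 9, -8, 4, 1, 6]
    # Hypothetical recording: two extra leading samples and two LSB errors
    recording = [11, 12, 0, 5, -2, 7, 2, 9, -8, 5, 1, 6]
    print(best_offset(source, recording, max_shift=5))  # prints 2
```

The brute-force search costs roughly track length times window size; for long tracks an FFT-based cross-correlation would be faster. A passage of digital silence matches at any offset, so the alignment should be done on a section with musical content.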

Note: the source computer converts audio data from 16/44.1k to 32/96k, which the RME soundcard then dithers back to 24 bits for S/PDIF transmission.

Analysing the result

Using foobar2000’s foo_convert.dll, the original 16-bit wav track as ripped from CD was converted to a 24/96k wav file (the ‘Source’ file). The copy track had been processed by foobar2000, passed over a poor S/PDIF link and recorded by Cubase. Using cicsWave, the two files were converted to numeric data, copied into a spreadsheet and time-aligned. The table shows samples from the 64,000 samples analysed, with the difference between samples displayed on the right.

It is apparent that these differences always and only occur in the least significant bit (LSB), and then in fewer than 50 per cent of samples. They can be attributed either to the Cubase volume control, which may cause rounding errors even when set to zero, or to the dithering done by the RME soundcard (the 32 to 24-bit conversion, i.e. dividing by 256, which is equivalent to dropping the least significant 8 bits). The remaining 23 bits were invariably copied without error, and the same is true for all of the 64,000 samples analysed. This is an impressive result, considering that a 16-bit wav file was upsampled in real time to 32/96k and transmitted as 24/96k S/PDIF, a real-world audio playback scenario. In short, excluding the rounding error, the samples are identical.

If the errors were caused by data corruption in the copying process rather than being an artefact of recording, the ‘mangled’ bits would tend to appear randomly, not just in the LSB, and markedly different amplitudes would be observed.
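The comparison described above amounts to subtracting aligned samples and checking which bits differ. The sketch below assumes both tracks are already aligned lists of signed integer samples at the same bit depth; the helper names `truncate_32_to_24` and `compare` are hypothetical. It models the 32 to 24-bit step as plain truncation (dividing by 256); the RME card's actual dithering is not modelled.

```python
def truncate_32_to_24(sample32):
    """Drop the 8 least significant bits of a signed 32-bit sample.

    For integers, an arithmetic right shift by 8 equals floor division
    by 256. This is plain truncation; a dithering converter would add
    low-level noise before requantising, which is not modelled here."""
    return sample32 >> 8


def compare(source, copy):
    """Summarise per-sample differences between two aligned sample lists."""
    diffs = [c - s for s, c in zip(source, copy)]
    # True when every pair agrees in all bits above bit 0 (the LSB)
    lsb_only = all((s >> 1) == (c >> 1) for s, c in zip(source, copy))
    return {
        "samples": len(diffs),
        "differing": sum(1 for d in diffs if d != 0),
        "max_abs_diff": max((abs(d) for d in diffs), default=0),
        "lsb_only": lsb_only,
    }


if __name__ == "__main__":
    print(truncate_32_to_24(0x7FFFFFFF) == 0x7FFFFF)  # prints True
    print(compare([10, 11, -4, 6], [10, 10, -3, 6]))
    # prints {'samples': 4, 'differing': 2, 'max_abs_diff': 1, 'lsb_only': True}
```

The two measures can disagree: a difference of one LSB in magnitude can carry into higher bits (127 versus 128, for example), so `max_abs_diff == 1` does not by itself guarantee that only bit 0 changed. The `lsb_only` flag is the stricter test of the claim that the upper 23 bits were copied without error.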